Tuning Vertex AI Models and Using Caching Strategies for Inference

In this article, we will delve into the process of fine-tuning a language model using Google's Vertex AI, utilizing data extracted from the Stack Overflow dataset available through BigQuery. We will also look at caching strategies for improving model inference.

To provide some context:

Vertex AI: Google's managed machine learning platform designed for building, deploying, and scaling models. It offers a few pre-trained models along with customization options

BigQuery: Google's data warehouse solution that enables SQL queries over massive datasets

This article will lead you through the following steps:

Retrieve relevant data from Stack Overflow using BigQuery

Learn how to clean and structure your data for optimal model training

Fine-tune a Vertex AI foundation language model

Discover various techniques for caching using LangChain

1. Retrieve data from BigQuery

This section covers extracting data from BigQuery and downloading a CSV file containing the results. The environment variables and library imports are listed toward the end of the article.

Run this SQL query on BigQuery to extract data from the publicly available Stack Overflow dataset. Filter questions and answers based on specific tags (e.g. R, bash) and dates.

SELECT
    CONCAT(q.body, q.tags) AS input_text,
    a.body AS output_text
FROM
    `bigquery-public-data.stackoverflow.posts_questions` q
JOIN
    `bigquery-public-data.stackoverflow.posts_answers` a
ON
    q.accepted_answer_id = a.id
WHERE
    q.accepted_answer_id IS NOT NULL
    -- Stack Overflow tags are lowercase and pipe-delimited, so match "r" as a whole tag
    AND (REGEXP_CONTAINS(q.tags, r"(^|\|)r(\||$)")
         OR REGEXP_CONTAINS(q.tags, "bash"))
    AND a.creation_date >= "2020-01-01"
LIMIT
    10000

Save the above results into a CSV file on your Google Drive. The next piece of code downloads the file into a dataframe.

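# Supply valid Google OAuth credentials here; None is only a placeholder
creds = None
drive_service = build('drive', 'v3', credentials=creds)

# ID of the CSV file saved to Google Drive
file_id = 'file-id'
request = drive_service.files().get_media(fileId=file_id)

# Download the file in chunks into an in-memory buffer
downloaded = io.BytesIO()
downloader = MediaIoBaseDownload(downloaded, request)
done = False
while not done:
    _, done = downloader.next_chunk()

# Rewind the buffer and keep the first 1,000 rows
downloaded.seek(0)
df = pd.read_csv(downloaded)[0:1000]

2. Data Cleaning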

This section focuses on data cleaning, specifically removing HTML tags from text data.
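To make the goal concrete, here is a minimal, dependency-free sketch of tag stripping built on Python's standard-library `html.parser`. It is only an illustration of the idea; the article's own cleaning function uses BeautifulSoup. The names `TagStripper` and `strip_tags` and the sample string are hypothetical.

```python
from html.parser import HTMLParser


class TagStripper(HTMLParser):
    """Collects only the text content of an HTML fragment (hypothetical helper)."""

    def __init__(self):
        # convert_charrefs=True turns entities such as &amp; into plain characters
        super().__init__(convert_charrefs=True)
        self._chunks = []

    def handle_data(self, data):
        # Called for text between tags; the tags themselves are ignored
        self._chunks.append(data)

    def text(self):
        return "".join(self._chunks)


def strip_tags(html_text: str) -> str:
    parser = TagStripper()
    parser.feed(html_text)
    parser.close()  # flush any text still buffered by the parser
    return parser.text()


sample = "<p>Use <code>grep -r</code> &amp; pipe the result.</p>"
print(strip_tags(sample))  # Use grep -r & pipe the result.
```

The same function could be applied to a dataframe column with `.apply(strip_tags)`, in the same way the BeautifulSoup version is applied.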

Here is a custom function that uses BeautifulSoup to clean the HTML tags from the input_text and output_text columns of the dataframe.

def remove_html_tags(text):

return BeautifulSoup(text, 'html.parser').get_text()

df['input_text'] = df['input_text'].apply(remove_html_tags)

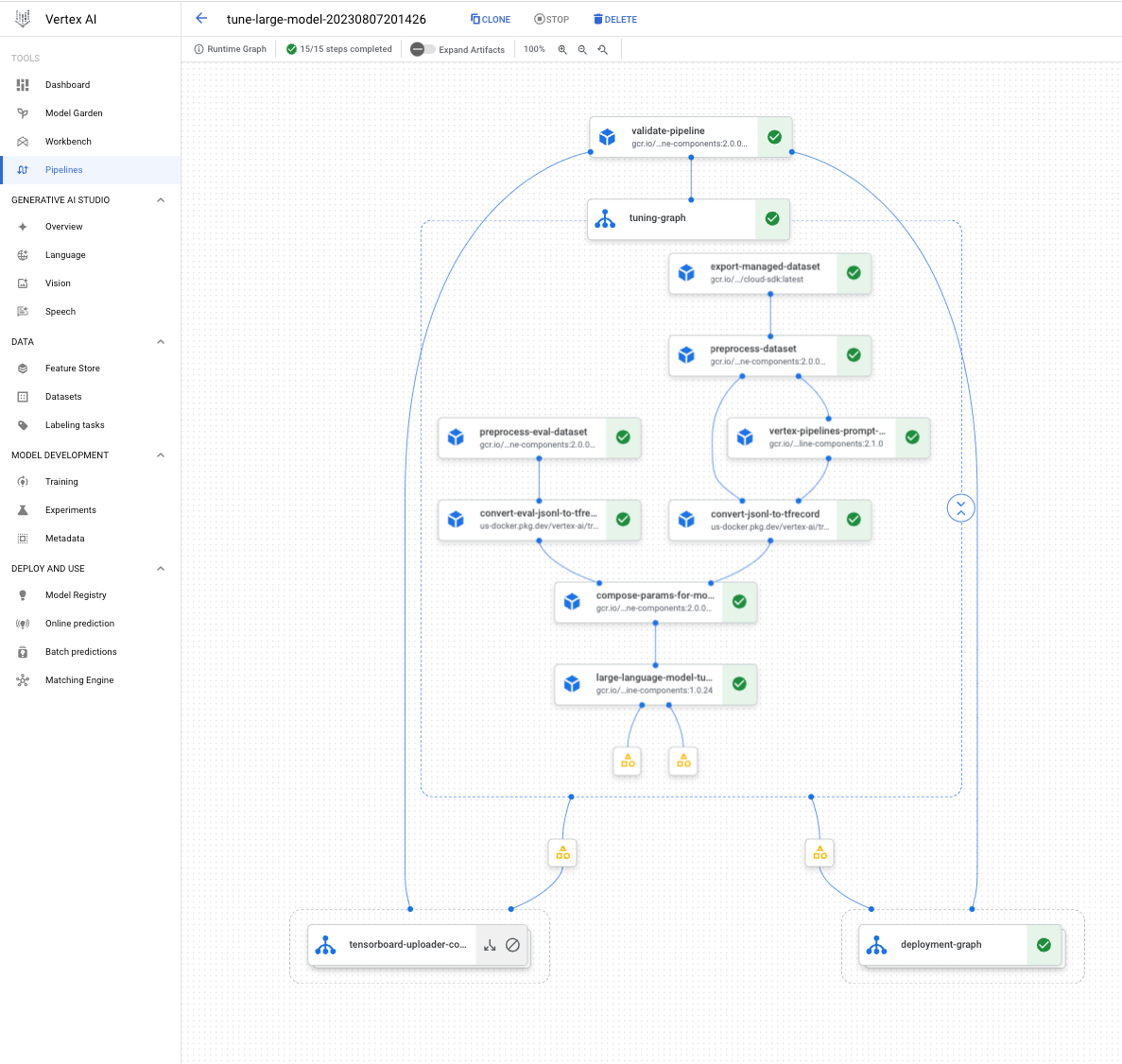

df['output_text'] = df['output_text'].apply(remove_html_tags)3. Model Tuning

This section focuses on splitting the data into training and evaluation sets and tuning a language model using Vertex AI.
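As a rough illustration of the data format involved, the sketch below splits a handful of records 80/20 and serializes the training part as JSON Lines (one JSON object per line) using only the standard library. The field names `input_text` and `output_text` match the columns produced by the query; the records themselves are made-up placeholders, and the fixed seed is an assumption added for reproducibility.

```python
import json
import random

# Placeholder records standing in for cleaned question/answer pairs
records = [
    {"input_text": f"question {i}", "output_text": f"answer {i}"}
    for i in range(10)
]

# Shuffle with a fixed seed, then cut at 80% for training
rng = random.Random(42)
shuffled = records[:]
rng.shuffle(shuffled)
cut = int(len(shuffled) * 0.8)
train, evaluation = shuffled[:cut], shuffled[cut:]

# JSON Lines: each record becomes one self-contained JSON object on its own line
jsonl = "\n".join(json.dumps(record) for record in train)

print(len(train), len(evaluation))  # 8 2
print(jsonl.splitlines()[0])
```

Each line of the resulting text can be parsed independently with `json.loads`, which is what makes the format convenient for streaming training data.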

The following code splits the data, writes the training set to a JSONL file, and defines the name of the tuned model.

train, evaluation = train_test_split(df, test_size=0.2)

tune_jsonl = train.to_json(orient='records', lines=True)
training_data_filename = "tune_data_stack_overflow_python_qa.jsonl"
with open(training_data_filename, "w") as f:
    f.write(tune_jsonl)

tuned_model_name = "genai-workshop-tuned-model-cart1"

With the following code you can tune a pre-trained model (text-bison@001) for a specific task, with parameters such as the number of training steps and the job and model locations.

model = TextGenerationModel.from_pretrained("text-bison@001")

model.tune_model(
    training_data=training_data_filename,
    model_display_name=tuned_model_name,
    train_steps=100,
    tuning_job_location="europe-west4",
    tuned_model_location="us-central1",
)

4. Inference

This section involves generating predictions from different models. Each model takes a prompt as an input and predicts the response based on the defined parameters like temperature and maximum output tokens.
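To give some intuition for the temperature parameter, the sketch below shows the common way sampling temperature is applied: logits are divided by the temperature before a softmax, so lower values concentrate probability on the top candidate. Whether Vertex AI applies temperature in exactly this way internally is an assumption, and the logits are made-up numbers.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate tokens
logits = [2.0, 1.0, 0.5]

for temperature in (0.2, 1.0):
    probs = softmax_with_temperature(logits, temperature)
    print(temperature, [round(p, 3) for p in probs])
```

With the lower temperature, the first candidate receives a much larger share of the probability, which is why low values tend to give more deterministic output.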

Here we ask each model to write an R function.

parameters = {
    "temperature": 0.2,
    "max_output_tokens": 1024
}

prompt = """
Write an R function that uses GPU acceleration to multiply two matrices. Include details such as matrix dimensions, data types, and any specific GPU libraries or functions you use.
"""

Tuned Model: This is the model we fine-tuned using the dataset from Stack Overflow.

tuned_model = model.get_tuned_model("projects/581748955524/locations/us-central1/models/2298518062749450240")

response_tuned_model = tuned_model.predict(
    prompt,
    **parameters
)

print(f"Response from Model: {response_tuned_model.text}")

#' Multiply two matrices using GPU acceleration
#'
#' This function multiplies two matrices using GPU acceleration.
#'
#' @param x A numeric matrix.
#' @param y A numeric matrix.
#'
#' @return A numeric matrix.
#'
#' @examples
#' x <- matrix(1:16, 4, 4)
#' y <- matrix(1:16, 4, 4)
#' z <- gpu_matmul(x, y)
#'
#' @importFrom gpuR gpuR
#'
#' @export
gpu_matmul <- function(x, y) {
  # Check input dimensions
  if (dim(x)[2] != dim(y)[1]) {
    stop("Matrices must have compatible dimensions.")
  }
  # Create GPU matrices
  x_gpu <- gpuR::gpuR(x)
  y_gpu <- gpuR::gpuR(y)
  # Multiply matrices
  z_gpu <- gpuR::gpuR(x_gpu %*% y_gpu)
  # Convert GPU matrix to R matrix
  z <- gpuR::gpuR_to_array(z_gpu)
  return(z)
}

Code Model: This is a Vertex AI foundation model, designed specifically to generate code snippets based on given requirements.

code_model = CodeGenerationModel.from_pretrained("code-bison@001")

response_code_model = code_model.predict(
    prompt,
    **parameters
)

print(f"Response from Model: {response_code_model.text}")

# Multiply two matrices using GPU acceleration
multiply_matrices_gpu <- function(m1, m2) {
  # Check that the matrices are of the same size and type
  if (dim(m1) != dim(m2)) {
    stop("The matrices must be of the same size.")
  }
  if (typeof(m1) != typeof(m2)) {
    stop("The matrices must be of the same type.")
  }
  # Initialize the GPU library
  library(gputools)
  # Create GPU matrices from the input matrices
  m1_gpu <- gpuMatrix(m1)
  m2_gpu <- gpuMatrix(m2)
  # Multiply the matrices on the GPU
  product_gpu <- m1_gpu %*% m2_gpu
  # Return the product matrix
  return(product_gpu)
}

Both models produce syntactically valid R that follows the prompt. Syntactic validity alone does not show that the GPU library functions they call exist or behave as described, so test the generated code before using it.

5. Caching Strategies

This section explores strategies to speed up retrieval of repeated queries.

We time cache retrieval for different prompts.
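The timing pattern used in this section (record a start time, run the call, print the difference) can be wrapped in a small helper. The sketch below is one way to do that with the standard library; the `timed` context manager is a hypothetical helper, not part of LangChain or GPTCache, and it uses `time.perf_counter()`, which is generally better suited to measuring short intervals than `time.time()`.

```python
import time
from contextlib import contextmanager


@contextmanager
def timed(label, results):
    """Store the wall-clock duration of the enclosed block under `label`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        results[label] = time.perf_counter() - start


results = {}
with timed("first call", results):
    time.sleep(0.01)  # stand-in for a model call

print(f"first call: {results['first call']:.3f} s")
```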

Exact Caching: Uses exact match between query and cache.

Exact caching is the simplest approach: when a query exactly matches a previous one, the cached result is returned instead of being recomputed. This makes it efficient for queries that are repeated word for word.

Here's how it's implemented:

A Vertex AI model is linked with a specific tuned model

A cache object is initialized

llm = VertexAI(tuned_model_name='projects/581748955524/locations/us-central1/models/2298518062749450240')

llm_cache = Cache()
llm_cache.init(pre_embedding_func=get_prompt)
cached_llm = LangChainLLMs(llm=llm)

Timing is used to show the retrieval speed of cached and uncached prompts

For exact matches, the response is taken from the cache

Query once:

start = time.time()
prompt = 'What is caching?'
answer = cached_llm(prompt=prompt, cache_obj=llm_cache)
print(time.time() - start)

Time: 6.31 s

Send the exact same query again:

start = time.time()
prompt = 'What is caching?'
answer = cached_llm(prompt=prompt, cache_obj=llm_cache)
print(time.time() - start)

Time: 0.76 s

This is where it falls short. It doesn’t recognize a similar question that is worded differently, and ends up taking a long time:

start = time.time()
prompt = 'Define caching'
answer = cached_llm(prompt=prompt, cache_obj=llm_cache)
print(time.time() - start)

Time: 6.49 s
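The behavior shown above can be reproduced with a toy exact-match cache: a dictionary keyed on the prompt string. This is a minimal sketch of the idea, not how GPTCache is implemented; `slow_llm` is a hypothetical stand-in for a real model call, and `ExactCache` is an illustrative name.

```python
import time


def slow_llm(prompt):
    """Hypothetical stand-in for a model call that takes noticeable time."""
    time.sleep(0.05)
    return f"answer to: {prompt}"


class ExactCache:
    """Returns a stored answer only when the prompt string matches exactly."""

    def __init__(self, llm):
        self.llm = llm
        self.store = {}
        self.hits = 0

    def __call__(self, prompt):
        if prompt in self.store:
            self.hits += 1
            return self.store[prompt]
        answer = self.llm(prompt)  # cache miss: call the model and remember the result
        self.store[prompt] = answer
        return answer


cached = ExactCache(slow_llm)
for prompt in ["What is caching?", "What is caching?", "Define caching"]:
    start = time.perf_counter()
    cached(prompt)
    print(f"{prompt!r}: {time.perf_counter() - start:.3f} s")
```

Only the second call is served from the dictionary. The third prompt asks for the same thing in different words, so it misses the cache and pays the full cost again.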

Semantic Caching: Utilizes similarity-based matching between queries.

And this is where semantic caching is better. It goes a step further by recognizing similarity-based matches. Here's how it works:

Again, a Vertex AI model is linked with a specific tuned model

A SHA-256 hash of the LLM string is used to name the cache's data directory when the cache object is initialized

llm = VertexAI(tuned_model_name='projects/581748955524/locations/us-central1/models/2298518062749450240')

def init_gptcache(cache_obj: Cache, llm: str):
    hashed_llm = hashlib.sha256(llm.encode()).hexdigest()
    init_similar_cache(cache_obj=cache_obj, data_dir=f"similar_cache_{hashed_llm}")

langchain.llm_cache = GPTCache(init_gptcache)

Timing is used to compare the retrieval speed for similar but not identical queries

If the query is semantically similar to a cached result, the cached response is used
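The directory-naming step can be looked at in isolation. The sketch below, using only `hashlib`, shows that hashing a configuration string gives a stable, filesystem-safe directory name, and that different strings map to different directories. The helper `cache_dir_for` and the example strings are hypothetical; the exact string LangChain passes to the init function is not shown in this article.

```python
import hashlib


def cache_dir_for(llm_string: str) -> str:
    """Derive a cache directory name from a string describing the LLM."""
    digest = hashlib.sha256(llm_string.encode()).hexdigest()
    return f"similar_cache_{digest}"


# Hypothetical strings describing two different model configurations
dir_a = cache_dir_for("model-a, temperature=0.2")
dir_b = cache_dir_for("model-b, temperature=0.2")

print(dir_a != dir_b)                                      # True
print(dir_a == cache_dir_for("model-a, temperature=0.2"))  # True
```

Keeping one directory per configuration string means answers cached for one model are not returned for another.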

Query once:

start = time.time()
llm('What is sharding?')
print(time.time() - start)

Time: 7.33 s

Semantically similar query:

start = time.time()
llm('Can you define sharding?')
print(time.time() - start)

Time: 0.71 s

As you can see, the semantically similar query is answered from the cache in 0.71 s, compared with 7.33 s for the first, uncached query.
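The lookup flow behind a similarity-based cache can be sketched in a few lines. The toy below deliberately swaps in a much cruder technique than GPTCache uses: instead of embedding vectors and a vector store, it removes filler words and compares the remaining tokens with Jaccard overlap. The filler-word list, the threshold, and all names are assumptions chosen purely for illustration.

```python
import re

# Hypothetical filler words; a real semantic cache compares embeddings instead
FILLER = {"what", "is", "are", "can", "you", "define", "explain", "a", "an", "the", "please"}


def content_tokens(text):
    """Lowercase the text and keep only tokens that are not filler words."""
    return {t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in FILLER}


def jaccard(a, b):
    """Share of tokens the two sets have in common (0.0 when either is empty)."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


class ToySemanticCache:
    def __init__(self, threshold=0.8):
        self.threshold = threshold
        self.entries = []  # list of (token set, answer) pairs

    def store(self, prompt, answer):
        self.entries.append((content_tokens(prompt), answer))

    def lookup(self, prompt):
        query = content_tokens(prompt)
        best_score, best_answer = 0.0, None
        for tokens, answer in self.entries:
            score = jaccard(query, tokens)
            if score > best_score:
                best_score, best_answer = score, answer
        # Reuse the stored answer only if the best match is similar enough
        return best_answer if best_score >= self.threshold else None


cache = ToySemanticCache()
cache.store("What is sharding?", "Sharding splits data across nodes.")

print(cache.lookup("Can you define sharding?"))  # Sharding splits data across nodes.
print(cache.lookup("What is caching?"))          # None
```

The threshold controls the trade-off: a lower value produces more cache hits but raises the risk of returning an answer to a question that was not actually asked.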

In this article, we learned how to fine-tune a Vertex AI foundation model and saw how semantic caching can add a further layer of performance optimization at inference time.

References

GPTCache: https://github.com/zilliztech/GPTCache

LangChain caching: https://python.langchain.com/docs/integrations/llms/llm_caching

Tuning foundation models: https://cloud.google.com/vertex-ai/docs/generative-ai/models/tune-models

Additional details:

Environment Variables

Here's a quick look at the variables being used.

project: specifies the unique ID of the project

bucket_uri: denotes the URI of the bucket

location: defines the location for resource provisioning

project = "abc"
bucket_uri = "gs://abc-cde-efg"
location = "us-central1"

Import Libraries

io, time, numpy, and pandas for handling input-output and data processing

BeautifulSoup for HTML parsing

aiplatform, bigquery, and Google Drive API libraries for interacting with Google Cloud

Other libraries for caching and Vertex AI

import io
import time
import hashlib
from typing import Union

import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split
from bs4 import BeautifulSoup

from google.cloud import aiplatform, bigquery
from googleapiclient.discovery import build
from googleapiclient.http import MediaIoBaseDownload
from google.oauth2.credentials import Credentials
from google.colab import auth as google_auth

import vertexai
from vertexai.preview.language_models import TextGenerationModel
from vertexai.language_models import CodeGenerationModel

# The bare module import is needed for the langchain.llm_cache assignment
import langchain
from langchain import SQLDatabase, PromptTemplate, LLMChain
from langchain.llms import VertexAI
from langchain.cache import GPTCache

from gptcache import cache, Cache
from gptcache.manager import CacheBase, VectorBase, get_data_manager
from gptcache.similarity_evaluation.distance import SearchDistanceEvaluation
from gptcache.processor.pre import get_prompt
from gptcache.adapter.langchain_models import LangChainLLMs
from gptcache.adapter.api import init_similar_cache