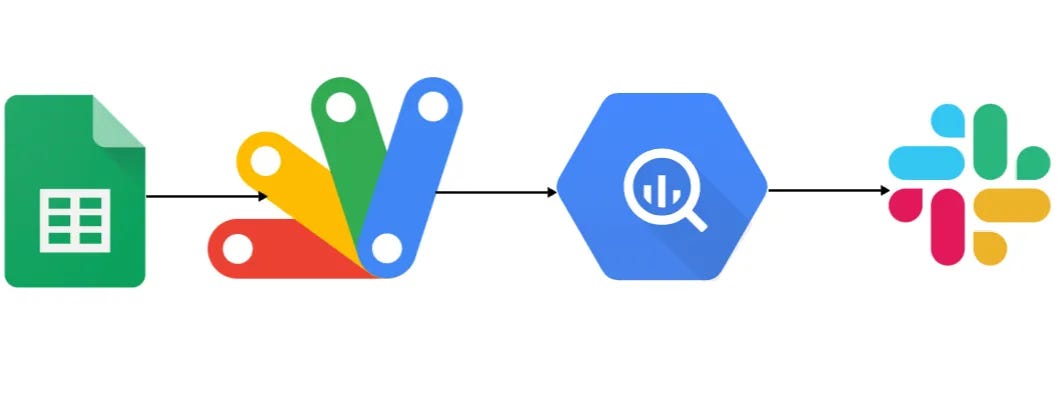

Data Sync Between Google BigQuery and Google Sheet

As a data engineer, one of the frequent tasks you might encounter is syncing data from a Google Sheet to a Google BigQuery table.

This process, while seemingly straightforward, introduces a set of challenges that can complicate maintaining data integrity and consistency

Append-Only Nature of BigQuery: BigQuery is designed primarily for appending data, not updating or deleting specific rows.

Deletions Aren’t Synced: Similarly, if you remove a row from your spreadsheet, this deletion won’t propagate to BigQuery.

Schema Changes Are Restricted: Modifying the structure of your Google Sheet presents another layer of complexity. While BigQuery allows for some schema modifications, these are limited.

In this blog, we will explore an App Script-based strategy that addresses these challenges head-on.

Our approach involves three main steps:

Download Sheet to a CSV

The first step involves exporting the Google Sheet data to a CSV format. Here’s how:

function exportSheetToCsv(sheetId, sheetName) {

var url = `https://docs.google.com/spreadsheets/d/${sheetId}/export?format=csv&name=${encodeURIComponent(sheetName)}`;

const options = { headers: { 'Authorization': 'Bearer ' + ScriptApp.getOAuthToken() } };

const response = UrlFetchApp.fetch(url, options);

const blob = response.getBlob();

return blob;

}

This function fetches the specified Google Sheet as a CSV, ready for upload to BigQuery.

Delete the Table from BigQuery

Remove the existing BigQuery table to address the append-only and deletion-sync issues.

To ensure our BigQuery dataset reflects the latest data structure and content from Google Sheets, we’ll delete the existing table:

function deleteBigQueryTable(projectId, datasetId, tableId) {

try {

BigQuery.Tables.remove(projectId, datasetId, tableId);

console.log('Table deleted successfully.');

} catch (e) {

console.log(`Error deleting table: ${e.message}`);

}

}Upload the CSV to BigQuery as a New Table

Create a fresh table in BigQuery with the same name, ensuring it reflects the current state of the Google Sheet.

With the table deleted, we upload the new data:

function uploadCsvToBigQuery(blob, projectId, datasetId, tableId) {

const file = DriveApp.createFile(blob);

const data = file.getBlob().setContentType('application/octet-stream');

const job = {

configuration: {

load: {

destinationTable: { projectId, datasetId, tableId },

sourceFormat: 'CSV',

autodetect: true,

skipLeadingRows: 1

}

}

};

BigQuery.Jobs.insert(job, projectId, data);

console.log('CSV uploaded and table created in BigQuery.');

}Send an Update Notification

Optionally, send a notification (e.g., via Slack) to inform stakeholders that the data sync has been completed.

function sendSlackNotification(webhookUrl, message) {

const payload = JSON.stringify({ text: message });

const options = {

method: 'post',

contentType: 'application/json',

payload: payload

};

UrlFetchApp.fetch(webhookUrl, options);

}Automating Data Sync with Time-Driven Triggers

To automate the data syncing process from Google Sheets to BigQuery, you can use Google Apps Script’s time-driven triggers. These triggers can run your data sync script at regular intervals — ranging from every minute to once a month.

Stitch it all together, and you get something like this:

function loadCsv() {

var url = 'https://docs.google.com/spreadsheets/d/' + 'sheet_ID' + '/export?format=csv&name=' + encodeURIComponent('sheet_name');

const options = {

headers: {

'Authorization': 'Bearer ' + ScriptApp.getOAuthToken()

}

};

const response = UrlFetchApp.fetch(url, options);

const currentDate = new Date();

const dateString = currentDate.toISOString().split('T')[0].replace(/-/g, '');

const blob = response.getBlob().setName('sheet_' + dateString + '.csv');

const exportedFile = DriveApp.createFile(blob);

const projectId = 'project_id';

const datasetId = 'dataset_id';

const csvFileId = exportedFile.getId();

const tableId = 'table_id'

// Delete existing table

try {

BigQuery.Tables.remove(projectId, datasetId, tableId);

console.log('Table deleted.');

} catch (e) {

console.log('Table deletion failed or table did not exist. Error: ' + e.message);

}

// Create new table with the same name

var table = {

tableReference: {

projectId: projectId,

datasetId: datasetId,

tableId: tableId

}

};

// Upload table to BigQuery

table = BigQuery.Tables.insert(table, projectId, datasetId);

console.log('Table created: %s', table.id);

const file = DriveApp.getFileById(csvFileId);

const data = file.getBlob().setContentType('application/octet-stream');

const job = {

configuration: {

load: {

destinationTable: {

projectId: projectId,

datasetId: datasetId,

tableId: tableId

},

skipLeadingRows: 1,

autodetect: true,

sourceFormat: 'CSV'

}

}

};

const jobResult = BigQuery.Jobs.insert(job, projectId, data);

console.log('Load job started. Status: ' + jobResult.status.state);

// Send a Slack

sendSlackNotification(webhookUrl, message)

}This solution ensures that your BigQuery dataset remains up-to-date with your Google Sheets, including reflecting any updates, deletions, or schema changes.

Feel free to customize my script based on your specific requirements!